The Price of Intelligence: Why Your Chatbot Has a Bigger Carbon Footprint Than Your Fridge

“The mind is not a vessel to be filled but a fire to be kindled.”

Well, Plutarch, the Greek Platonist philosopher, never paid an electricity bill for a data center.

Energy vs Intelligence: Tennis and Terawatts

Padel might be the weekend warrior’s sport of choice across Indonesia, but for the past two months, it’s been tennis that dominated the world stage. Between the French Open and Wimbledon, fans were treated to back-to-back showdowns of human endurance — none more epic than Carlos Alcaraz vs. Jannik Sinner.

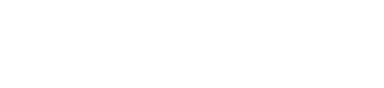

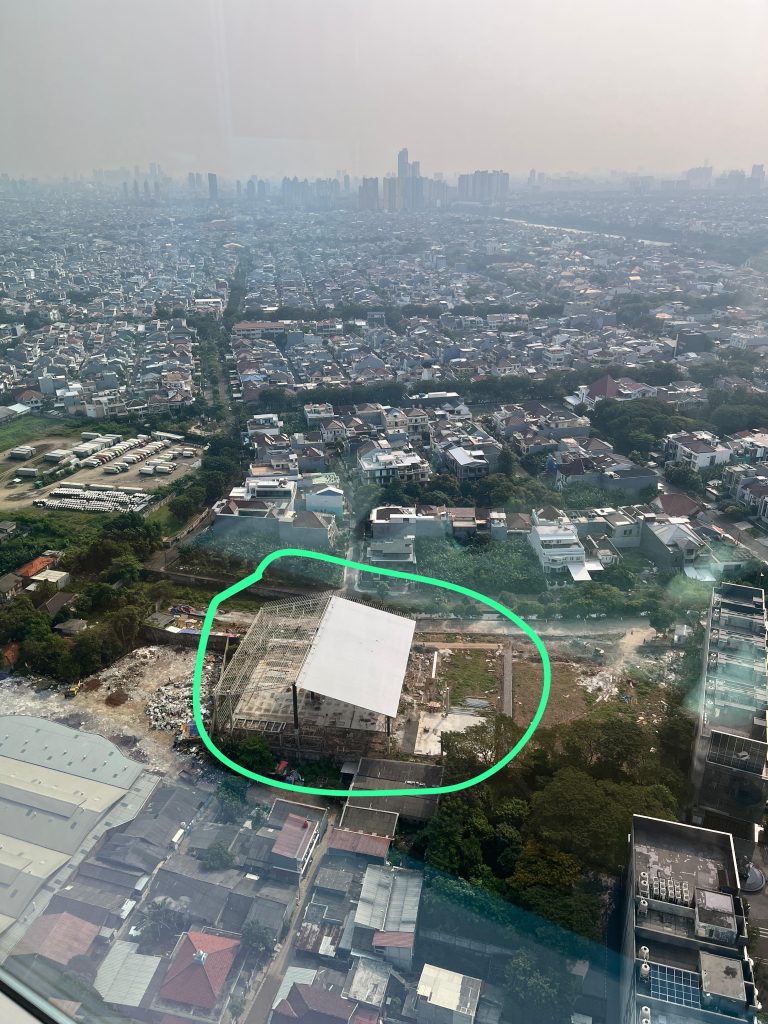

We weren’t kidding about the Jakarta padel craze. We spotted two courts being built within 5 km of each other.

At the French Open, they pushed each other to five-and-a-half hours of clay-court cinema. Then came Wimbledon, where Sinner defeated Alcaraz in a tight four-set final. These guys weren’t just hitting balls; they were burning 4,000+ calories, draining glycogen stores, chugging electrolyte shakes, and downing sushi rolls like it was an endurance buffet.

Carlos Alcaraz’s euphoric collapse after winning the French Open

Jannik Sinner’s well-deserved win at Wimbledon, weeks after his loss to Carlos

All of this for one thing: to win.

Now imagine this: the energy they burned over five hours? Your AI assistant could use roughly a fifth of that just generating a five-second video of a cat doing the Macarena.

Sport has its logic. AI? It just has inputs.

Ask, and Ye Shall Be Billed

We live in an age where asking a chatbot to write your best man’s speech is easier than asking your actual best man. The only thing easier? Forgetting that every witty AI response comes with a side order of carbon emissions and an electricity tab that would make a Bitcoin miner blush.

Here’s a stat to ruin your next AI-generated love poem: creating a single five-second AI-generated video consumes more energy than running a microwave for over an hour.

Remember when people said every Google search is like boiling a pot of water? Well, AI said, “Hold my beer.”

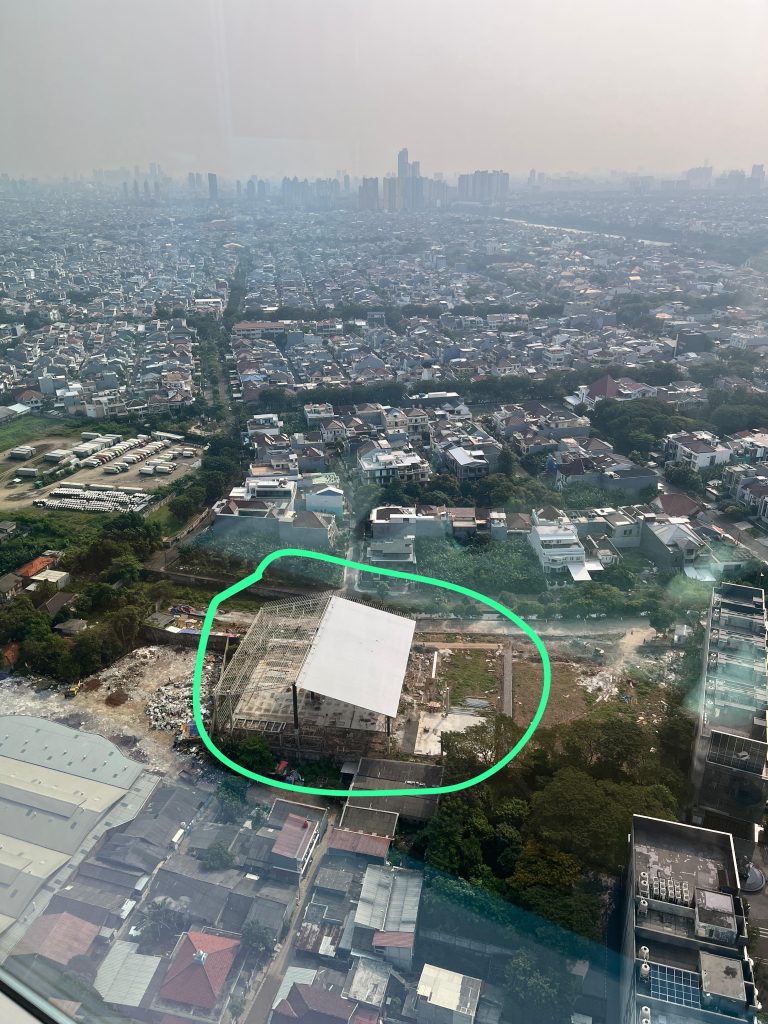

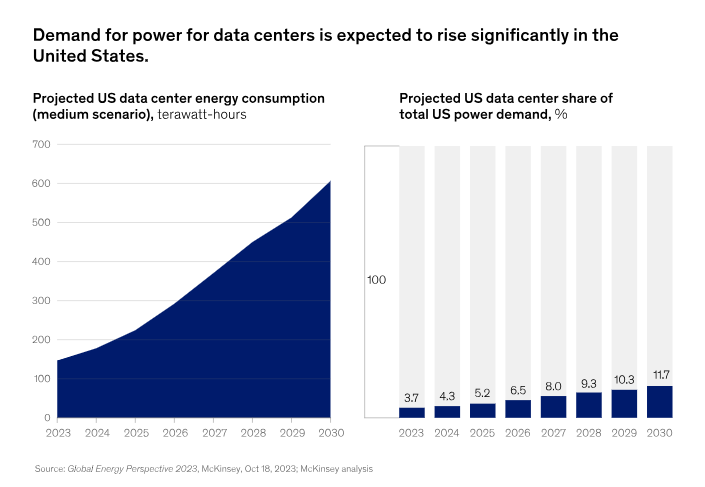

Source: McKinsey

One Token at a Time: The Physics of Thought

Unlike humans who can blurt out nonsense in bulk, large language models generate intelligence the way monks transcribe scripture — one token at a time. Literally.

Each word, each fragment of a sentence, each “uhm… actually” is calculated, processed, and produced token by token. And each token? That’s energy. A lot of it.

Meta’s open-source model Llama 3.1 405B burns through 6,706 joules per response, enough to move you 400 feet on an e-bike or power your microwave for eight seconds.

And that’s just text. Want a picture of a sloth eating ramen on the moon? Double it. Want a video of that same sloth moonwalking while eating ramen? That’ll be 3.4 million joules, please.
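For a rough sanity check on these comparisons, the joule figures can be converted into microwave run-time. This is a back-of-the-envelope sketch, not a measurement: the 800 W microwave rating is our assumption (the comparisons don't state a wattage), and the function and variable names are purely illustrative.

```python
# Back-of-the-envelope check: how long could a microwave run on a given amount of energy?
# ASSUMPTION: an 800 W microwave; the article's comparisons do not state a wattage.
MICROWAVE_WATTS = 800

TEXT_RESPONSE_JOULES = 6_706   # Llama 3.1 405B, per response (figure quoted in this section)
VIDEO_JOULES = 3_400_000       # five-second video (figure quoted in this section)


def microwave_seconds(joules: float, watts: float = MICROWAVE_WATTS) -> float:
    """Seconds a device drawing `watts` could run on `joules` (1 W = 1 J/s)."""
    return joules / watts


text_seconds = microwave_seconds(TEXT_RESPONSE_JOULES)
video_minutes = microwave_seconds(VIDEO_JOULES) / 60
video_vs_text = VIDEO_JOULES / TEXT_RESPONSE_JOULES

print(f"Text response: ~{text_seconds:.1f} s of microwave time")
print(f"Five-second video: ~{video_minutes:.0f} min of microwave time")
print(f"One video is roughly {video_vs_text:.0f} text responses")
```

Under that assumed wattage, one text response works out to about eight seconds of microwave time and the video to a bit over an hour, which is where the "microwave for over an hour" comparison comes from.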

We’re not generating intelligence anymore. We’re mining it.

From LOLs to Terawatts: The Rise of the Inference Empire

Training the model is expensive, sure — GPT-4 reportedly cost over $100 million to train, consuming enough energy to power San Francisco for three days.

But that’s just the beginning. Most of the energy burn comes after the model is trained. That’s the inference stage — the moment when you, the user, type “Explain inflation like I’m five” and the AI replies “Imagine you have 10 cookies and suddenly the price of cookies triples.”

That cute reply? Brought to you by thousands of GPUs, humming fans, and water-cooled server racks spread across sprawling data centers. As of 2025, inference accounts for 80–90% of AI’s computing power consumption.

⚡ The Coming Energy Crunch: AI Is Not Your Average App

Unlike traditional apps, which got more energy-efficient over time (thank you Moore’s Law), AI is like that kid who eats more and more every year and doesn’t stop growing.

The real kicker? AI’s energy demand is projected to rise so steeply that by 2028, it could consume as much electricity annually as 22% of all US households.

Meanwhile, most data centers are still powered by fossil fuels. Some AI companies are racing to build new nuclear power plants (seriously, Meta and Microsoft are trying), but those take time. In the meantime, expect more methane-powered generators and a few raised eyebrows from environmental regulators.

Enter: Aura Farming, But for Terawatts

In another corner of the internet, 11-year-old Rayyan Arkan Dikha, better known as Dika, has been dancing on the prow of a canoe during traditional boat races called “Pacu Jalur” in Riau. His charisma, sunglasses, and swagger sparked a global meme sensation: “aura farming.”

Dika with 1000x aura points

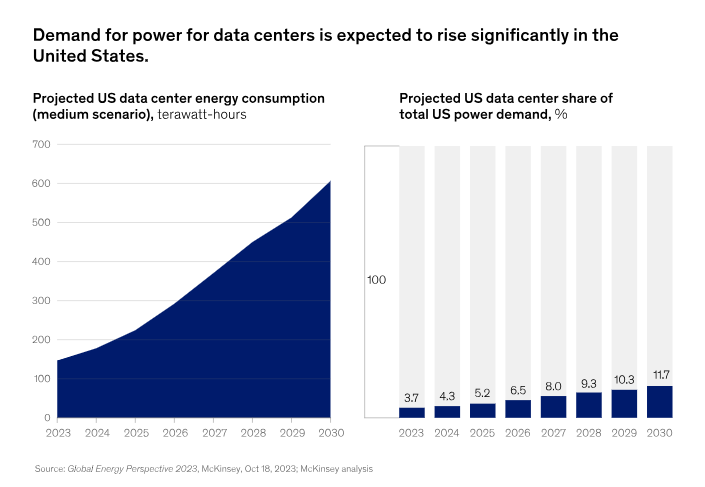

From K-pop bands like Enhypen to corporate brands like Duolingo and world-famous DJ Steve Aoki, the aura farming goes crazy

The dance has been recreated by everyone from Travis Kelce (star football player and also Taylor Swift’s boyfriend) to the Savannah Bananas baseball team. The phrase now refers to doing something cool, repetitive, and charismatic to build vibe capital — and Dika’s doing it without touching a watt.

Meanwhile, our digital models farm aura a little differently: by torching through megawatts.

So the question is: which aura is more sustainable?

One is rooted in tradition, community, and culture. The other? Burned into silicon and powered by a carbon-heavy grid.

Decoding the Compute Layer: The Cost of Brains in the Cloud

Let’s break this down with our Heyokha lens: in our ABC framework (AI, Blockchain, Compute), compute is the often-forgotten but absolutely vital third sibling. It’s the protein shake behind AI’s intellectual six-pack. We’ve touched on the “A” and “B” factors in our latest blogs.

And compute, quite literally, means energy, chips, servers, water, and real estate.

- Want smarter AI? You need bigger models, which means more parameters and more chips.

- More chips? You need more cooling. Some data centers are guzzling millions of gallons of water per day just to keep it all from melting down.

- And unless someone invents an energy-efficient way to hallucinate cat memes, we’re going to need a lot more juice.

The Unintended Consequences: When Chatbots Demand Power Plants

The trend is clear: every company wants to “AI-enable” everything. From your fridge recommending recipes to your Excel sheet auto-analyzing Q3 earnings.

But this intelligence arms race comes at a steep cost. As AI gets baked into everything, it threatens to reshape our energy grids, strain our infrastructure, and increase your electricity bill.

Yes — utility companies are already striking deals with data centers that may pass on higher energy costs to you and me. In Virginia, the average ratepayer could pay an extra $37.50/month thanks to data center expansion.

But the cost isn’t just measured in dollars.

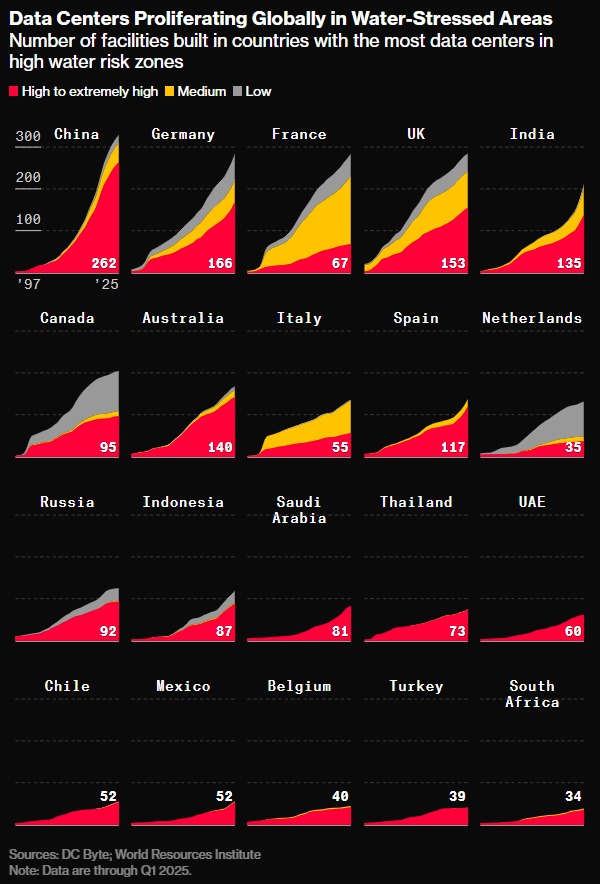

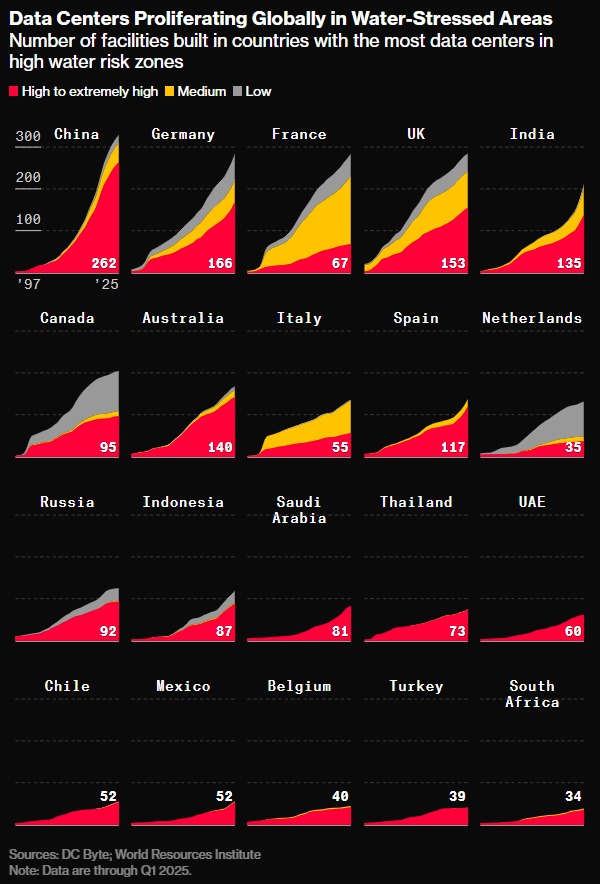

Water, the original cooling tech, is now a silent casualty of the AI revolution. According to Bloomberg, about two-thirds of new AI data centers since 2022 have been built in water-stressed regions, from Arizona to India to the UAE. A single 100-megawatt facility — enough to power 75,000 homes — can use 2 million liters of water per day, or the equivalent of 6,500 households’ daily water needs.

Water-stressed areas are seeing the most growth in new data centers

Source: Bloomberg
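To put the 100-megawatt facility example in per-household terms, the quoted figures can be divided through. This is a quick sketch using only the numbers cited above; the per-household results are implied averages from our own arithmetic, not figures reported by Bloomberg, and the variable names are illustrative.

```python
# Implied per-household averages from the 100 MW facility example quoted above.
FACILITY_LITERS_PER_DAY = 2_000_000   # daily water use of one 100 MW facility
HOUSEHOLDS_WATER_EQUIV = 6_500        # households with the same daily water needs
FACILITY_MEGAWATTS = 100
HOMES_POWERED = 75_000                # homes the same power could supply

# Water: liters per household per day implied by the comparison.
liters_per_household = FACILITY_LITERS_PER_DAY / HOUSEHOLDS_WATER_EQUIV

# Power: average continuous draw per home (in kW) implied by the comparison.
kilowatts_per_home = FACILITY_MEGAWATTS * 1_000 / HOMES_POWERED

print(f"Implied water use: ~{liters_per_household:.0f} liters per household per day")
print(f"Implied power draw: ~{kilowatts_per_home:.2f} kW per home on average")
```

In other words, the comparison assumes a household uses a little over 300 liters of water a day and draws a bit more than 1.3 kW on average.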

As more data centers rely on evaporative cooling systems, often using fresh or even potable water, communities from Texas to the Netherlands have begun protesting. Because while servers need cooling, so do crops, households, and ecosystems.

So when your smart speaker tells you a joke, just know you might be paying for it twice: once in laughs, and once in kilowatt-hours.

So What?

We’re not here to be anti-AI. In fact, we’re bullish on the productivity and innovation it can unlock. But as investors, observers, and humans who still like forests and breathable air, we should ask:

- Who benefits from this AI-driven energy boom?

- Which companies are selling the shovels in this new “intelligence gold rush”?

- Will AI be the catalyst that accelerates nuclear adoption, or will it deepen our dependence on gas?

As we see it, energy is quickly becoming the new strategic battleground for intelligence. Not just oil for tanks — but watts for bots.

Tara Mulia

Admin heyokha
