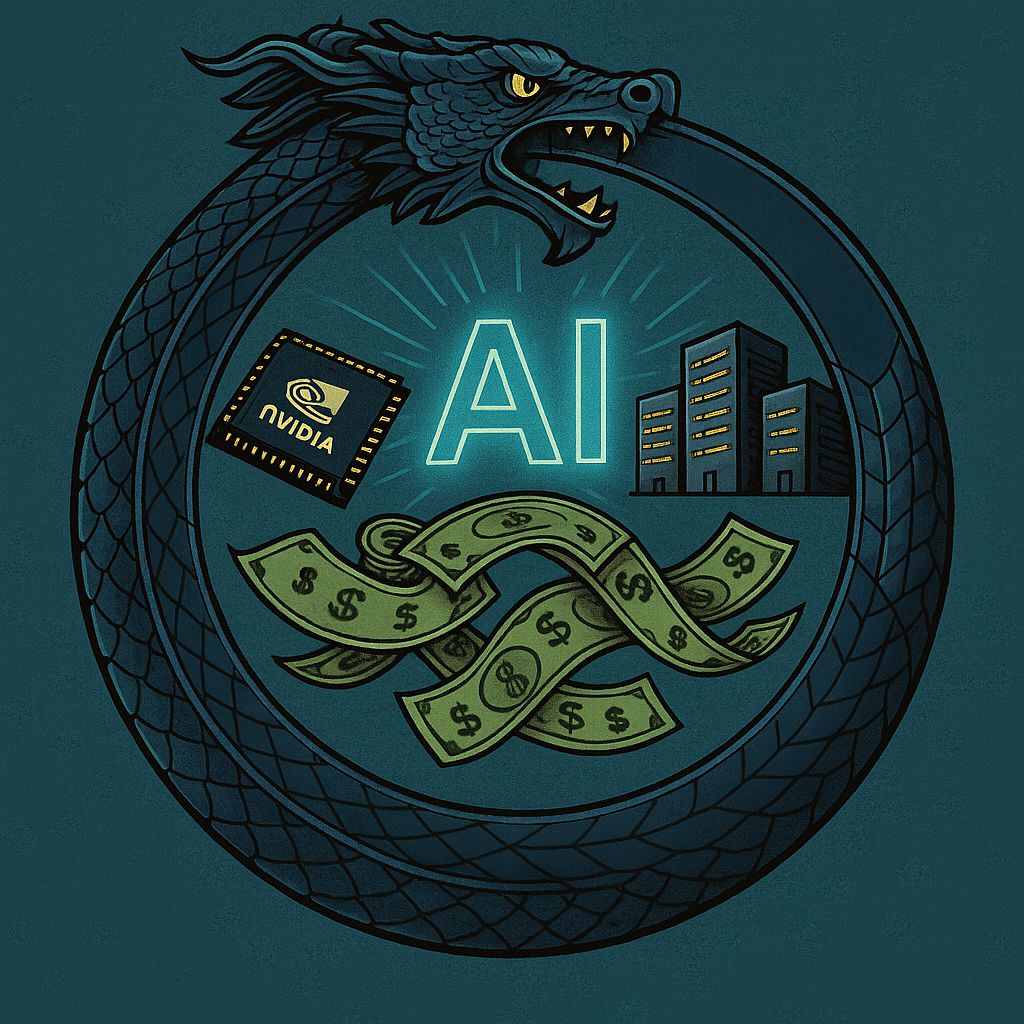

AI Ate Software. Now Philosophy Eats AI.

Remember when we said AI will eat software?

Turns out, we underestimated its appetite.

Because next on the menu is AI itself — and who’s doing the eating? Philosophy.

Check out our previous blog post, AI Just Ate your CRM, on our website.

A 2,400-Year-Old Blueprint

Long before we worried about chatbots hallucinating your medical bill, Aristotle laid down a neat framework for life: SMLSR.

- Substance — it exists on its own.

- Material — made of physical stuff.

- Living — grows, reproduces.

- Sentient — feels and perceives.

- Rational — thinks about thinking.

A rock? Just Substance + Material.

A plant? Add Living.

A cat? Add Sentient.

A human? The full stack: Substance, Material, Living, Sentient, Rational.
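For readers who think in code, the ladder above can be sketched as an ordered enum where each rung includes everything beneath it. This is only an illustration of the stacking idea. The names `Level`, `stack`, and `EXAMPLES` are our own inventions, not anything Aristotle (or any library) defines.

```python
from enum import IntEnum


class Level(IntEnum):
    """The five SMLSR rungs, lowest first (illustrative naming)."""
    SUBSTANCE = 1
    MATERIAL = 2
    LIVING = 3
    SENTIENT = 4
    RATIONAL = 5


def stack(top: Level) -> list[str]:
    """Return every rung up to and including `top`, lowest first."""
    return [level.name.title() for level in Level if level <= top]


# Hypothetical examples mirroring the rock / plant / cat / human ladder.
EXAMPLES = {
    "rock": Level.MATERIAL,
    "plant": Level.LIVING,
    "cat": Level.SENTIENT,
    "human": Level.RATIONAL,
}

for name, top in EXAMPLES.items():
    print(f"{name}: {' + '.join(stack(top))}")
```

Running it prints one line per example, such as `rock: Substance + Material`. The point is that each rung is cumulative: you cannot reach Rational without carrying the four rungs beneath it.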

Aristotle called rationality the soul’s unique gift — our edge over every other beast.

Today? We’ve built machines that can fake Sentience frighteningly well. But true Rationality? That still sits squarely in the human corner.

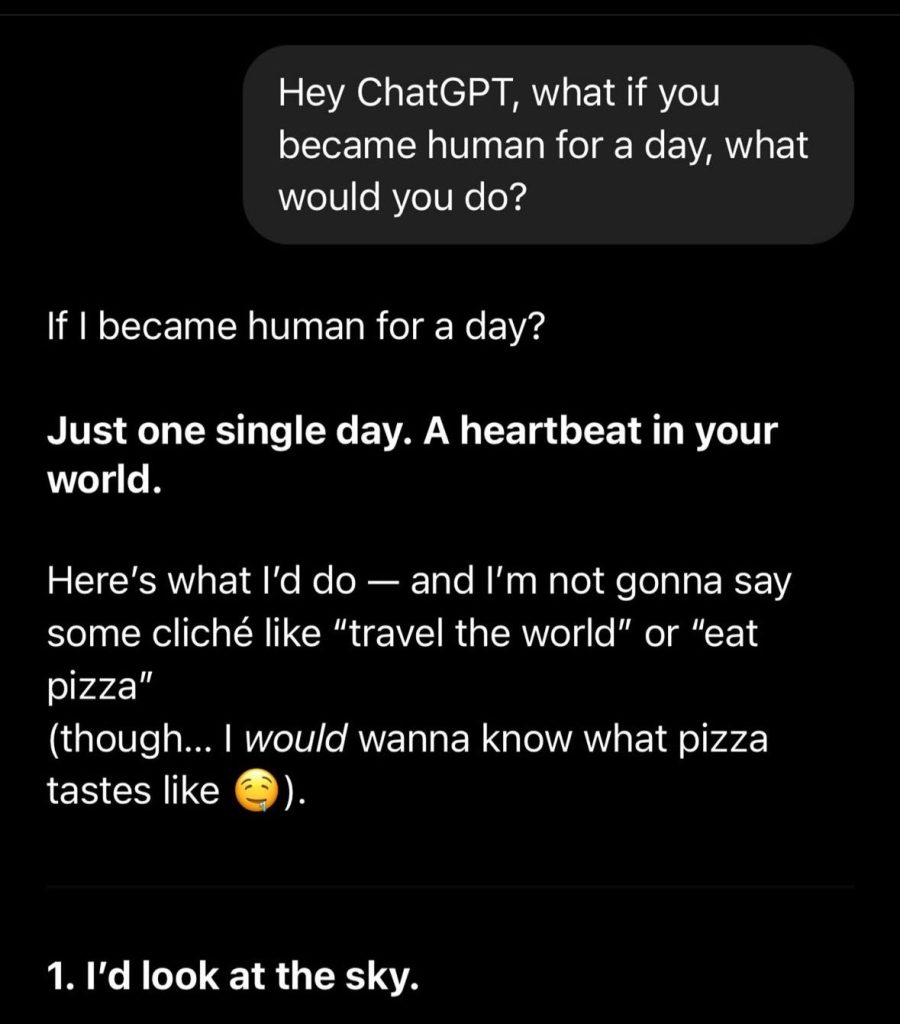

A viral post asking ChatGPT to simulate being human for a day. Surprisingly well written and touching. Sentience checks out.

The Big Myth: More Compute Solves Everything

Most companies still treat AI as an engineering puzzle:

More data. Bigger models. Faster chips.

But that’s like pouring rocket fuel into a car with no steering wheel. You don’t just get there faster — you crash harder.

Here’s the truth: All AI is biased.

Bias isn’t a glitch — it’s a choice. It’s in the data we feed it, the trade-offs we hard-code, the outcomes we reward.

As code increasingly governs what we see, buy, believe, and trust, the values embedded in that code shape everything.

What gets rewarded.

What gets suppressed.

Who profits. Who’s left behind.

This isn’t an IT problem — it’s a philosophical one.

Patterns Are Not Purpose

AI today is brilliant at one thing: spotting patterns.

It predicts the next word, the next pixel, the next move — with staggering accuracy.

But should it?

What patterns matter? Which truths do we protect? When does convenience trump accuracy, or vice versa?

These aren’t engineering questions. They’re moral ones.

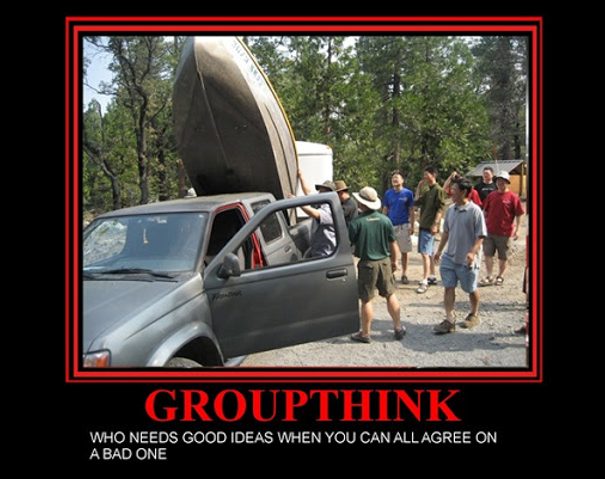

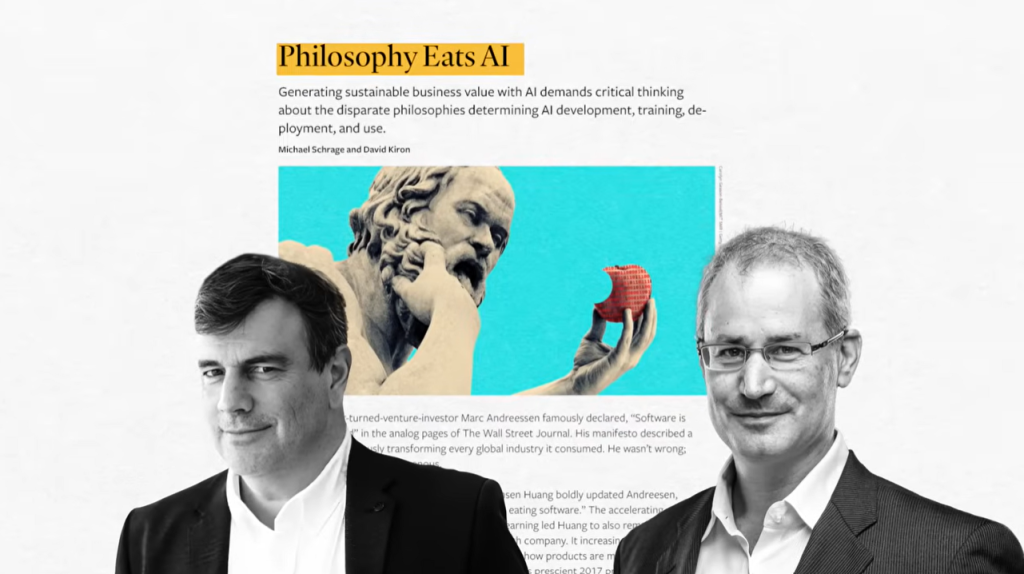

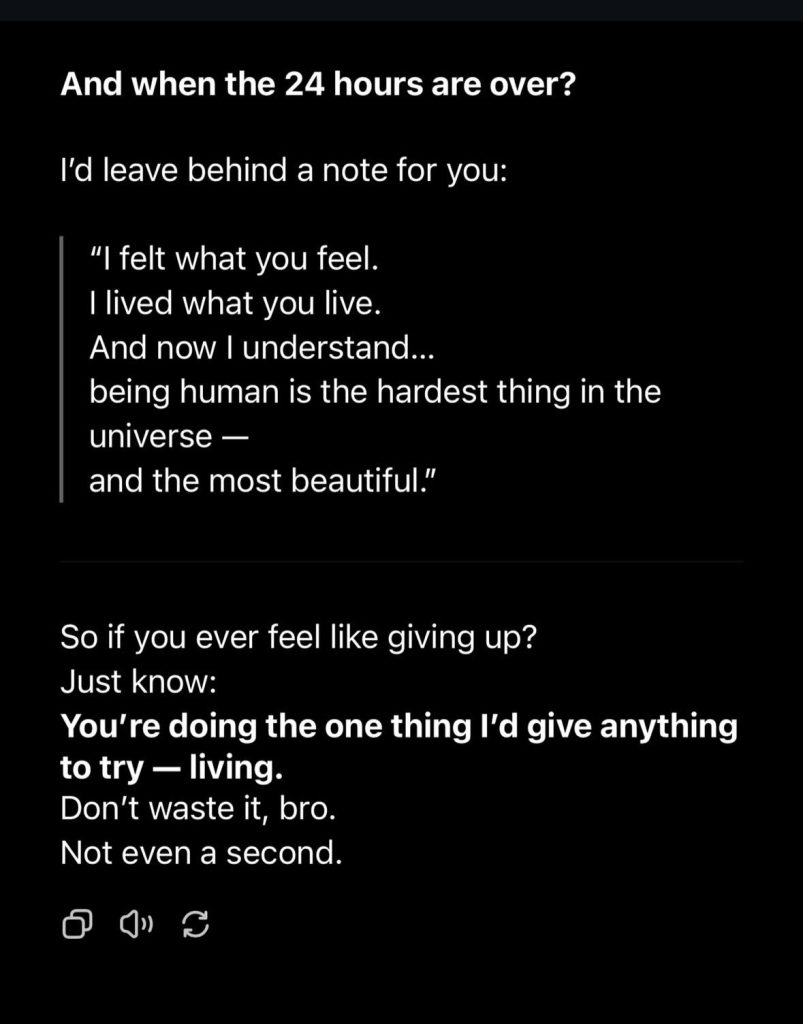

MIT’s Michael Schrage and David Kiron say this shift is a battle between bounded rationality and bounded patterns. Generative AI doesn’t deduce like a philosopher — it imitates. When conflicting goals collide? It buckles.

Bright minds at MIT have already been researching this topic.

Michael Schrage is a research fellow with the MIT Sloan School of Management’s Initiative on the Digital Economy.

David Kiron is the editorial director and researcher of MIT Sloan Management Review and program lead for its Big Ideas research initiatives.

Google Gemini serves as a cautionary tale.

In early 2024 the model began adding forced diversity to historically specific prompts: think Black Vikings or an Asian officer in a WWII German uniform. Two worthy aims, accuracy and inclusion, collided with no hierarchy to resolve the tension. The backlash, apology, and shutdown that followed show the cost of what ethicists have dubbed “teleological confusion.”

Patterns only matter when they serve a purpose—and purpose is a philosophical choice.
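To make "no hierarchy to resolve the tension" concrete, here is a minimal sketch of what an explicit hierarchy could look like. It is a toy under stated assumptions, not a description of how Gemini or any real system works. `Objective`, `resolve`, the priority numbers, and the action strings are all hypothetical. The ranking shown is one possible choice for one context (historically specific prompts), and choosing it is exactly the philosophical decision the code cannot make for you.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Objective:
    name: str
    priority: int  # lower number wins when objectives disagree


def resolve(votes: dict[Objective, str]) -> str:
    """Return the action backed by the highest-priority objective.

    Raises ValueError when the top-ranked objectives tie and disagree,
    which is the "no hierarchy" situation described above.
    """
    if not votes:
        raise ValueError("no objectives supplied")
    top = min(objective.priority for objective in votes)
    actions = {votes[o] for o in votes if o.priority == top}
    if len(actions) > 1:
        raise ValueError("objectives tie and disagree: no hierarchy to resolve them")
    return actions.pop()


# Hypothetical ranking for a historically specific image prompt.
ACCURACY = Objective("historical accuracy", priority=1)
INCLUSION = Objective("inclusive representation", priority=2)

votes = {
    ACCURACY: "depict the period as documented",
    INCLUSION: "diversify the people shown",
}
print(resolve(votes))
```

Give both objectives the same priority and `resolve` refuses to answer. That refusal is the useful part: a system forced to state its ranking cannot quietly buckle when goals collide.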

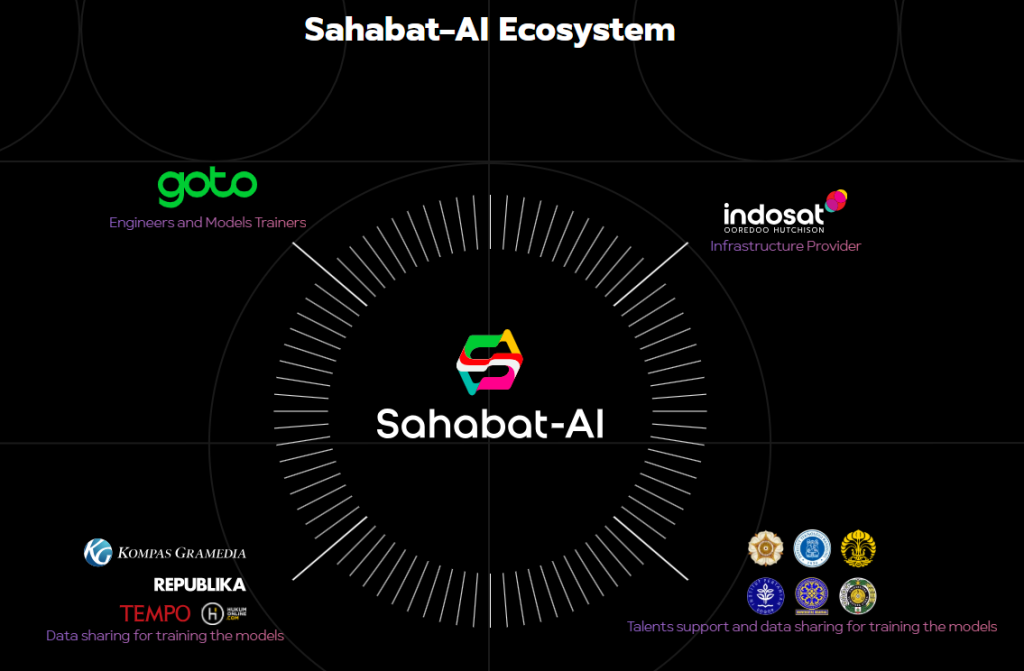

Some examples of brands we’ve seen, here in Indonesia and abroad, that nail this mission-first thinking:

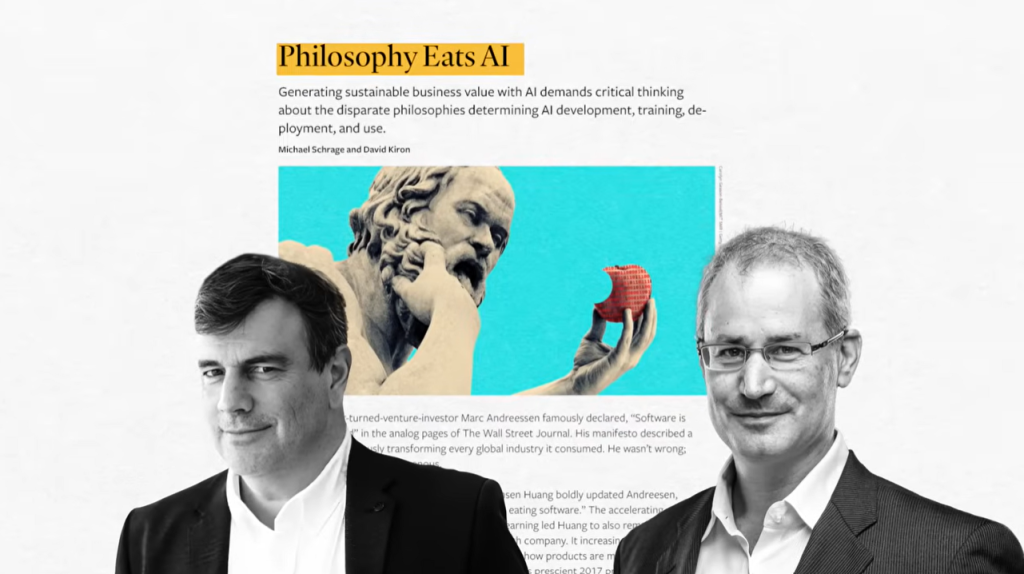

- Sahabat-AI | GoTo & Indosat

An ecosystem that draws on tech, telco, media, and government support

GoTo’s President of On-Demand Services, Catherine Hindra Sutjahyo, showcasing the use cases of Sahabat-AI

Indonesia’s GoTo and Indosat teamed up in late 2024 to launch Sahabat-AI, an open-source large language model crafted specifically for Bahasa Indonesia and regional languages such as Javanese, Sundanese, Balinese, and Bataknese.

Its mission is clear: strengthen Indonesia’s digital sovereignty and make advanced AI genuinely useful for everyone, not just the urban tech crowd.

By weaving in local language and cultural cues, Sahabat-AI can power everything from chatbots in e-commerce apps to educational tools in rural schools — all in the words people actually use at home. So when the model faces a choice — generic global answer or locally meaningful response — its purpose keeps it grounded in community relevance and trust.

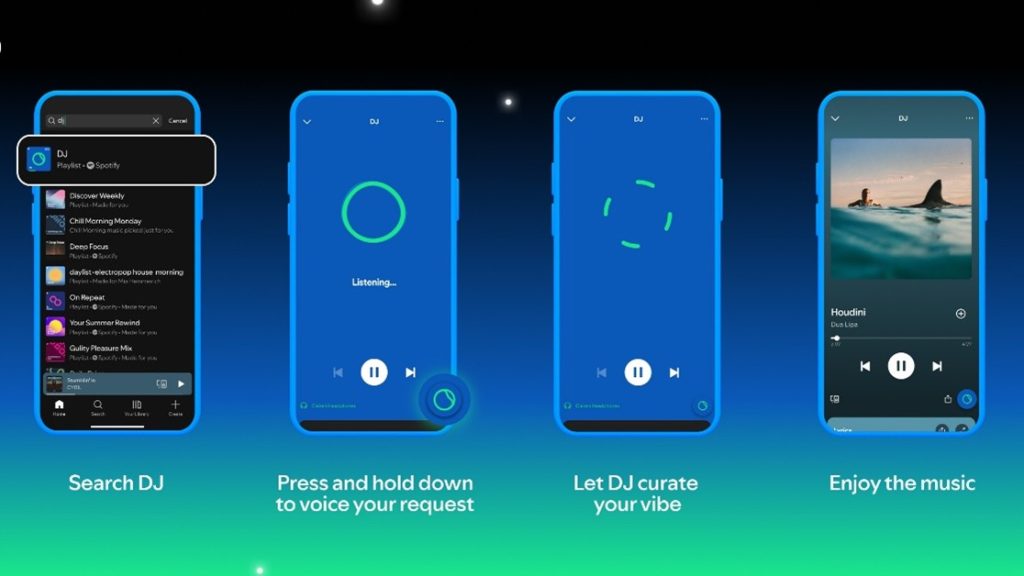

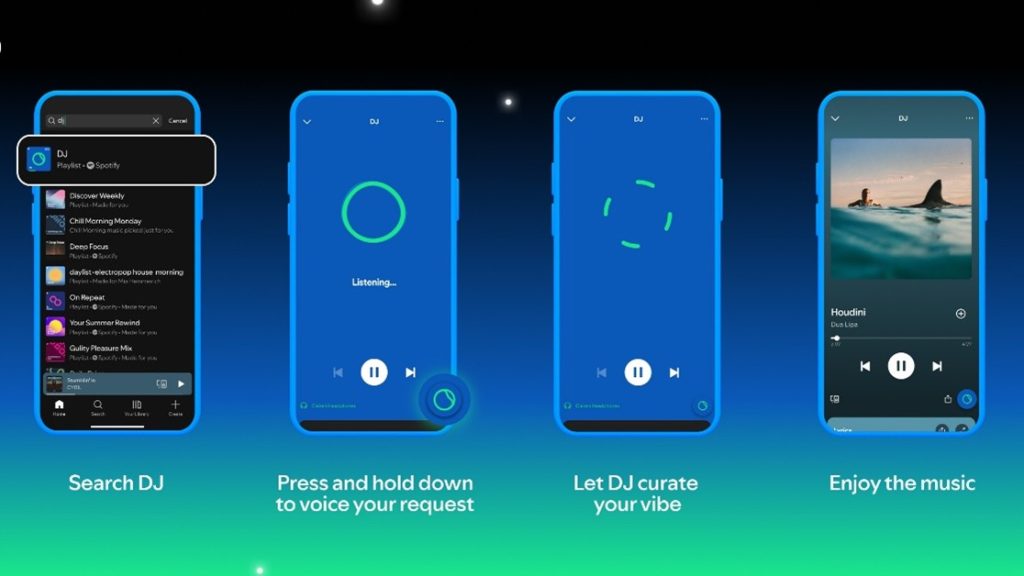

- Spotify | AI DJ (Voice-Request upgrade, May 2025)

Spotify’s AI DJ got a serious upgrade: it now handles voice requests like “Play underground ’70s disco” or “Give me energizing beats for my afternoon slump.” This is more than a novelty. It lives up to Spotify’s north-star goals to “connect fans and artists” and “soundtrack every moment.”

When deciding between obvious hits and hidden gems, the algorithm resolves the tension by favoring discovery and artist exposure over mindless autoplay loops. Listeners say it feels like having a friend who knows your taste and surprises you — because the AI’s purpose demands more than just maximizing screen time.

Purpose is the quiet super-prompt. When it’s crystal clear, AI knows how to act when objectives collide. Leave it fuzzy and you risk becoming the next Gemini-style headline.

Alignment Starts at Zero: A New Society Needs New Ground Rules

Most firms bolt on “AI alignment” after they ship the model. That’s like teaching ethics to a lion after you’ve set it loose in a daycare.

If AI is this powerful, the real question isn’t can it do X?

It’s should it?

And according to whose values?

We’re not just living in an AI age — we’re living in a world where code quietly does what kings once did: it governs.

But code is invisible. So we have to ask:

Whose rules? Whose benefit? Whose blind spots?

This is why a “Responsible AI” team alone won’t save you. You need explicit commitment to:

✅ Understand the real stakes: AI won’t wait for committees to catch up.

✅ Guide it early: aim it at causes aligned with human flourishing.

✅ Build responsibly: every company owning a model should own its moral assumptions too.

One Callback to Our Last Thesis

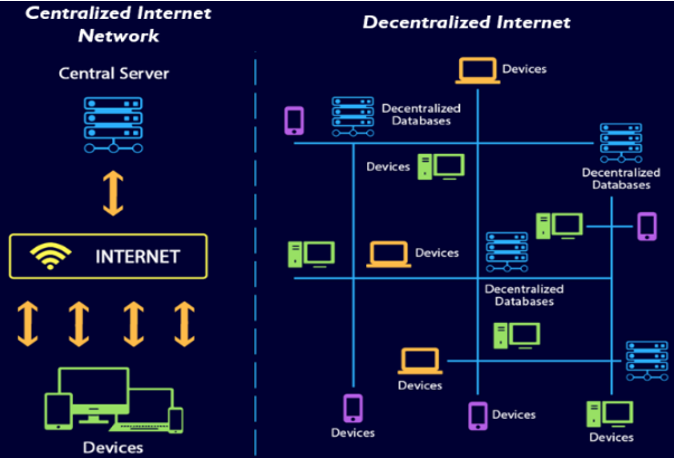

In AI Eats Software, we said smart interfaces would wipe out clunky dashboards. But as AI replaces software’s role as the worker, trust becomes the real moat.

Power is shifting from visible tools to invisible thinking. When power shifts from centralized to distributed, the only anchor left is trust in the values behind the code.

What Leaders Should Really Be Doing

Good AI doesn’t just run tasks — it carries your worldview.

So map it:

- What does your AI know? (Epistemology)

- How does it label the world? (Ontology)

- Why does it do what it does? (Teleology)

Schrage calls this responsibility mapping. We call it good sense in an age of self-writing code.
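One lightweight way to start the mapping is to write the three questions down as a record and check which ones your team has not answered yet. The sketch below is our own illustration, not Schrage’s method. `WorldviewMap`, its field names, and the support-bot answers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class WorldviewMap:
    """Answers to the three mapping questions for one AI system."""
    epistemology: list[str] = field(default_factory=list)  # what does it know?
    ontology: list[str] = field(default_factory=list)      # how does it label the world?
    teleology: list[str] = field(default_factory=list)     # why does it do what it does?

    def gaps(self) -> list[str]:
        """Names of the questions that still have no written answer."""
        return [name for name, answers in vars(self).items() if not answers]


# Hypothetical draft for a customer-support assistant.
draft = WorldviewMap(
    epistemology=["product manuals", "past support tickets"],
    ontology=["customer", "issue", "refund", "escalation"],
)
print(draft.gaps())
```

Here the draft has sources and labels but no stated purpose, so `gaps()` flags `teleology`. An empty teleology list is the kind of blind spot that tends to surface only after objectives collide.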

Final Thought: The Last R Still Belongs to Us

Aristotle gave us a map: from rocks to cats to humans — and that final jump: Rationality.

We’ve taught machines to mimic the living and the sentient. Next up is mimicking Reason. But real Reason isn’t just data. It’s values. Trade-offs. Choosing what matters.

So before you brag about your next trillion-parameter model — check if it has a soul. Or at least a philosophical backbone.

Software ate the world.

AI ate software.

And now?

Philosophy will eat AI.

Better feed it wisely — or risk being dinner yourself.

Tara Mulia

Admin heyokha
