The Next AI Moat: Built in Gigawatts, Not Just Code

When people talk about artificial intelligence, the conversation usually drifts into abstraction: models, tokens, algorithms, cognition. But underneath all that magic is a very physical reality: silicon chips, cooling fans, thousands of kilometres of fibre optic cables, and megawatts of electricity coursing through humming data centres.

In fact, as 2025 unfolds, AI is no longer just a story of breakthroughs. It’s a story of build-outs. And increasingly, the real competitive edge doesn’t lie in model architecture or training techniques, but in something much simpler: who can scale electricity, chips, and infrastructure faster.

When Data Meets Voltage

AI carried U.S. equities in Q3. The sector now accounts for nearly 80% of the S&P 500’s year-to-date gains. The capital intensity is staggering: AI-related investments contributed nearly 40% of U.S. GDP growth in 2025. Nvidia’s rally may get the headlines, but the unsung hero of this boom is infrastructure.

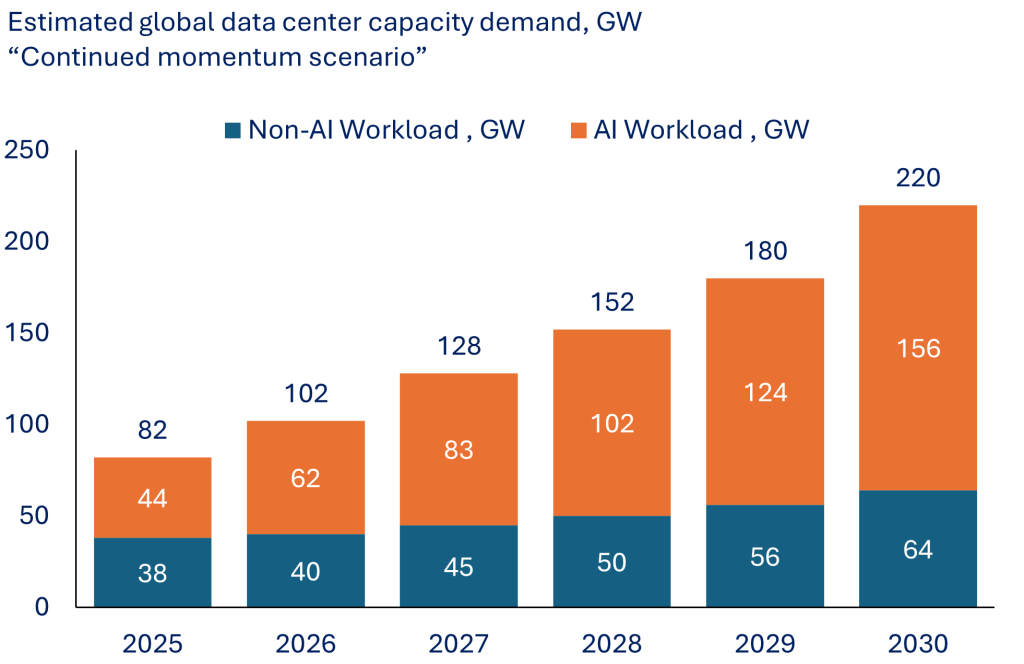

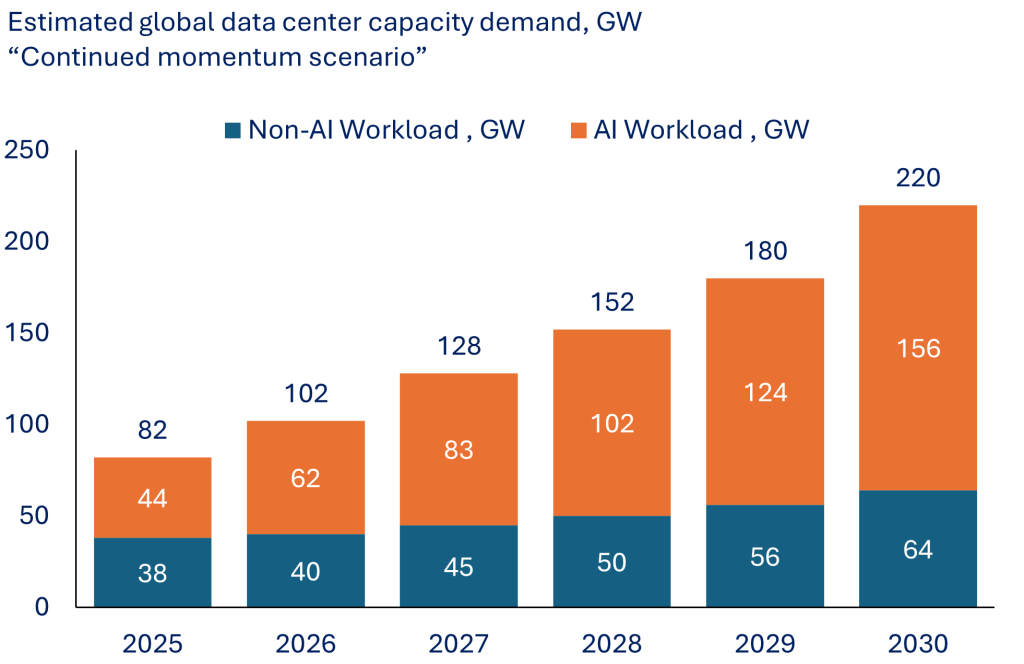

Global computing capacity is set to expand massively

Source: McKinsey

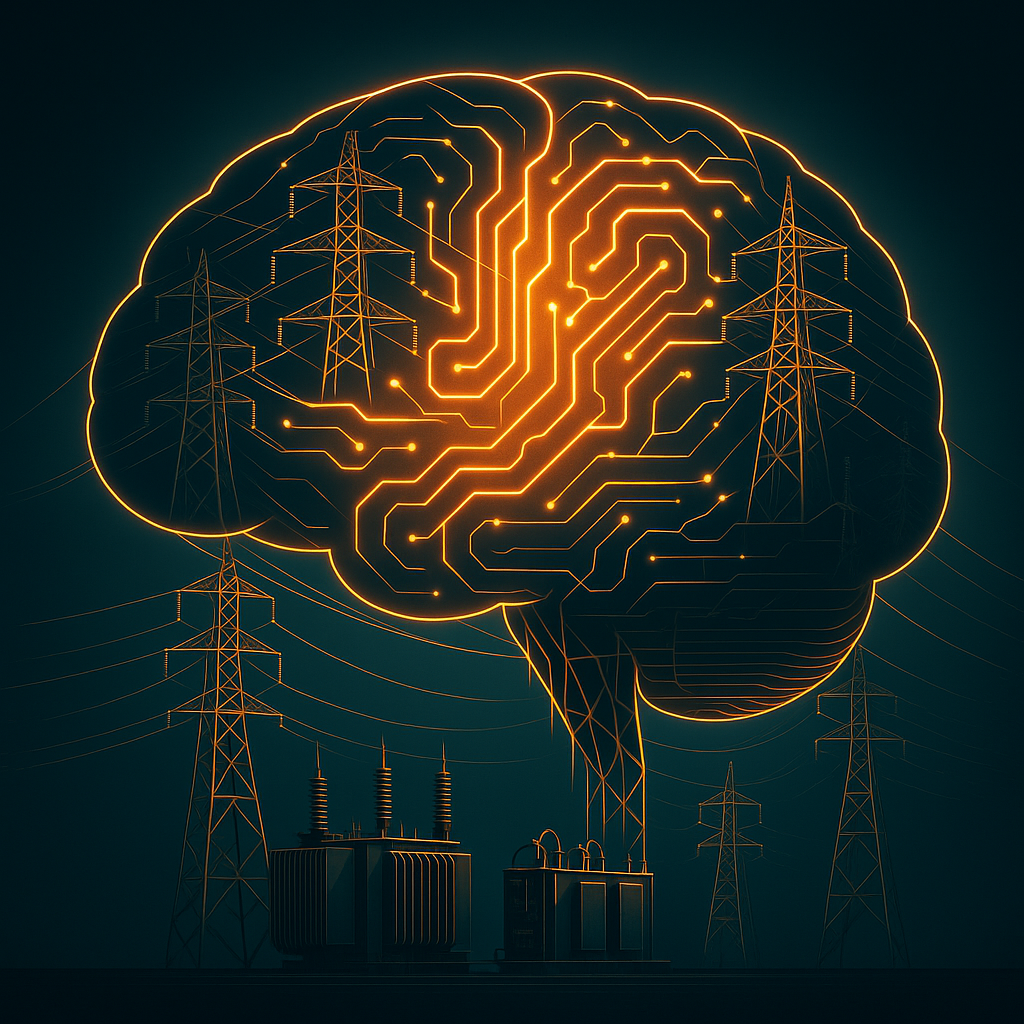

Data centres alone are projected to consume 1,600 TWh of electricity by 2035, equivalent to 4.4% of global power use. That would make “AI” the world’s fourth-largest electricity consumer, behind only the U.S., China, and India.

Compute demand is now doubling every 100 days. Power, water, land, and transmission capacity are no longer cost inputs. They are hard constraints.
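To put that doubling rate in annual terms, here is a back-of-the-envelope sketch in Python. It assumes the 100-day doubling holds steady for a full year, which is our simplification rather than a forecast, and the function name is purely illustrative.

```python
def annual_growth_factor(doubling_days: float, days_per_year: float = 365.0) -> float:
    """Growth multiple over one year for a quantity that doubles every `doubling_days`.

    Assumption: the doubling period stays constant across the whole year.
    """
    return 2.0 ** (days_per_year / doubling_days)


# Figure quoted in the article: compute demand doubles every 100 days.
factor = annual_growth_factor(100)
print(f"Doubling every 100 days implies about {factor:.1f}x growth in a year")
```

Under that assumption, demand multiplies more than twelvefold in twelve months, which is why grid capacity planned on multi-year timelines struggles to keep up.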

Gridlock vs. Greenlight: The U.S. Grid Struggles as China Powers Ahead

This shift is already causing cracks. Microsoft recently admitted that power has become “the biggest issue” for future data centre builds. Amazon is flagging grid shortages, even as hyperscaler capex is projected to jump from US$370 billion in 2025 to US$470 billion in 2026.

In response, U.S. tech giants are building their own generators just to keep expansion plans alive. But between fragmented state grids and a politicised permitting system, scaling energy capacity in America is looking less like innovation and more like improvisation.

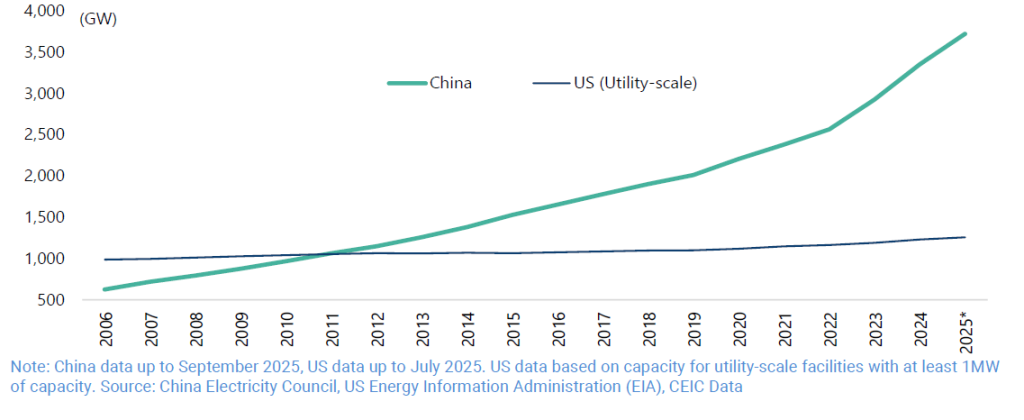

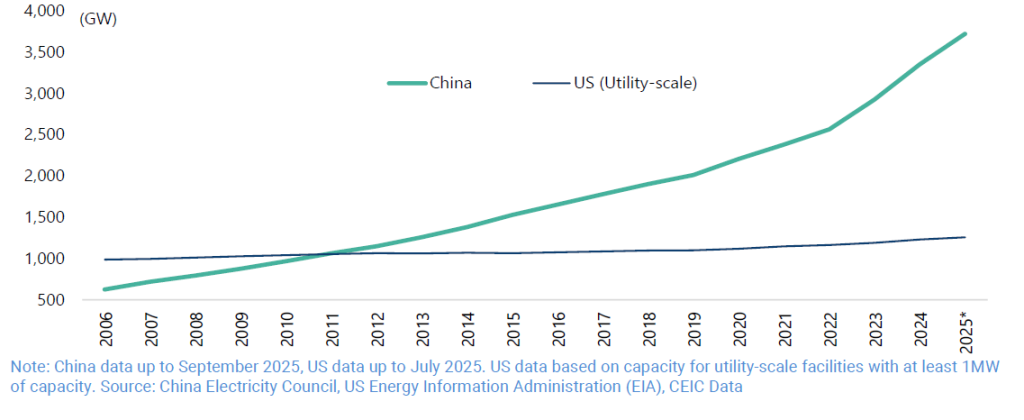

China, by contrast, is turning its state-led muscle into an AI advantage. In the first nine months of 2025, China added 240 GW of solar power—more than the entire installed solar capacity of the U.S.

China and U.S. (utility scale) electricity generation capacity (stock). This is where the real power lies

Source: China Electricity Council, US Energy Information Administration (EIA)

According to OpenAI’s open letter to the White House, China added 429 GW of new power capacity in 2024 alone. That’s more than one-third of the entire U.S. grid, built in a single year.

And the state isn’t just expanding power. It’s subsidising it.

And it’s not just about keeping the lights on. It’s about rerouting the entire tech stack. With U.S. export controls choking access to Nvidia’s best chips, China is doubling down on homegrown hardware. That energy advantage is now powering a new playbook: build your own chips, subsidise their use, and cluster them like there’s no tomorrow.

Powering the Home Team

Beijing’s strategy is clear: if you can’t buy the best chips, out-build the system around them.

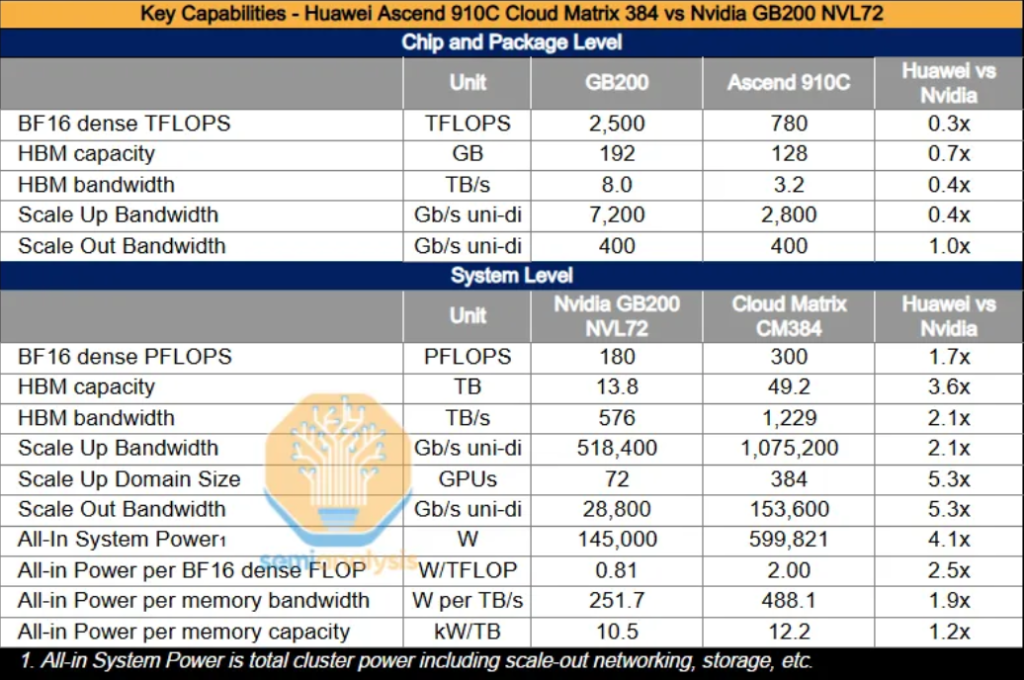

With Washington tightening export controls on Nvidia’s AI hardware, Chinese tech giants found themselves cut off from the gold standard. So Huawei stepped in with a workaround: not a better chip, but a bigger cluster.

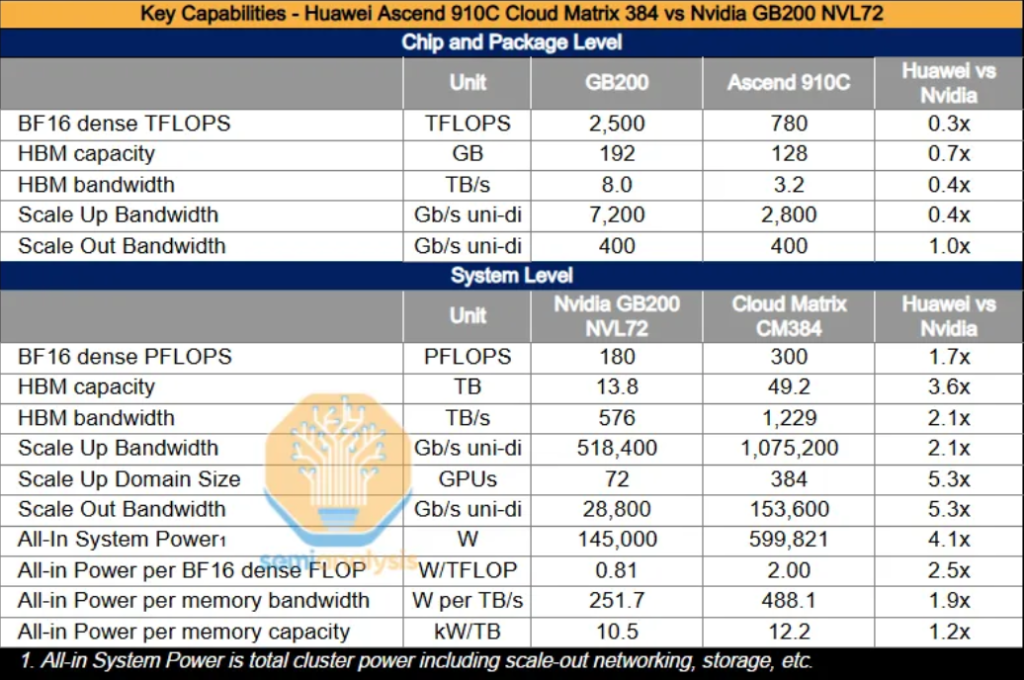

Its new CloudMatrix 384 system links together 384 of its Ascend 910C processors, compensating for weaker single-chip performance with brute-force architecture and advanced networking. The result? A cluster Huawei claims outperforms Nvidia’s flagship NVL72 on compute and memory, even if it gulps far more power and demands more manpower to maintain.

Source: SemiAnalysis, Nvidia, Huawei

A full CloudMatrix setup now delivers 300 PFLOPs of dense BF16 compute. This is nearly twice the performance of Nvidia’s GB200 NVL72. With over 3.6 times the total memory capacity and 2.1 times the bandwidth, Huawei isn’t just catching up. It’s building an AI system that can go toe-to-toe with Nvidia’s best.
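A rough per-chip comparison shows how much of that lead comes from scale rather than silicon. The sketch below uses the figures quoted above plus two assumptions of ours: that the NVL72 contains 72 GPUs, as its name suggests, and that "nearly twice" is treated as a ratio of 2.0, which is an upper bound rather than a measured figure.

```python
CLOUDMATRIX_PFLOPS = 300.0   # dense BF16 compute, figure quoted in the article
CLOUDMATRIX_CHIPS = 384      # Ascend 910C processors per CloudMatrix 384 system
NVL72_GPUS = 72              # assumption: GPU count taken from the product name
ASSUMED_SYSTEM_RATIO = 2.0   # assumption: "nearly twice", used as an upper bound


def per_chip_pflops(system_pflops: float, chips: int) -> float:
    """Average compute per chip for a system of `chips` processors."""
    return system_pflops / chips


ascend = per_chip_pflops(CLOUDMATRIX_PFLOPS, CLOUDMATRIX_CHIPS)

# Implied NVL72 system compute if CloudMatrix were exactly 2.0x faster.
nvl72_system_pflops = CLOUDMATRIX_PFLOPS / ASSUMED_SYSTEM_RATIO
nvidia = per_chip_pflops(nvl72_system_pflops, NVL72_GPUS)

per_chip_ratio = ascend / nvidia                    # Ascend chip relative to Nvidia GPU
chip_count_ratio = CLOUDMATRIX_CHIPS / NVL72_GPUS   # how many more chips Huawei links

print(f"Ascend per chip: {ascend:.2f} PFLOPS")
print(f"Implied Nvidia per GPU: {nvidia:.2f} PFLOPS")
print(f"Per-chip ratio: {per_chip_ratio:.3f}, chip-count ratio: {chip_count_ratio:.2f}")
```

On these inputs each Ascend chip delivers at most about three-eighths of the compute of an Nvidia GPU, and the system lead comes from linking more than five times as many chips.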

And here’s where the state steps in again, not just with chips but with cheap juice.

Local governments in provinces like Gansu, Guizhou, and Inner Mongolia are now offering power subsidies that slash electricity bills by up to 50%, but only if you’re running domestic chips. That’s on top of cash incentives generous enough to cover a data centre’s operating costs for a year.

The logic is brutal but effective: reduce Chinese firms’ dependency on Nvidia and make up for the efficiency gap with fiscal firepower. Huawei’s chips may consume 30–50% more electricity than Nvidia’s, but if the power is cheap enough, it doesn’t matter.

Crowd gathers to admire Huawei’s Ascend-powered CloudMatrix 384 system at the 2025 World AI Conference in Shanghai.

Source: South China Morning Post, Xinhua

And the power is, in fact, cheap. Thanks to China’s centralised grid and abundant power in remote provinces, average industrial electricity costs in these subsidised regions hover around 5.6 cents/kWh — a steep discount to the 9.1 cents/kWh U.S. average. Add a surplus of engineers, and the equation tilts further in Beijing’s favour.
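The trade-off between hungrier chips and cheaper power can be checked with simple arithmetic. The sketch below is a simplification under our own assumptions: it compares energy bills only, for the same workload, and ignores cooling, hardware cost, and maintenance. The function and constant names are illustrative.

```python
CHINA_SUBSIDISED_PRICE = 5.6  # US cents per kWh, figure quoted in the article
US_AVERAGE_PRICE = 9.1        # US cents per kWh, figure quoted in the article


def relative_energy_bill(extra_power: float) -> float:
    """Energy bill of a domestic-chip cluster in a subsidised Chinese province,
    relative to an Nvidia cluster paying the U.S. average price.

    `extra_power` is the additional electricity the domestic chips draw,
    e.g. 0.30 for 30% more. Assumption: same workload, energy cost only.
    """
    return (1.0 + extra_power) * CHINA_SUBSIDISED_PRICE / US_AVERAGE_PRICE


# The article's range: Huawei's chips may consume 30-50% more electricity.
for extra in (0.30, 0.50):
    print(f"{extra:.0%} more power -> {relative_energy_bill(extra):.2f}x the U.S. bill")

# Extra power draw at which the two energy bills would be equal.
breakeven_extra_power = US_AVERAGE_PRICE / CHINA_SUBSIDISED_PRICE - 1.0
print(f"Break-even extra power draw: {breakeven_extra_power:.1%}")
```

On these inputs the domestic cluster's energy bill comes out at roughly 80% to 92% of the U.S. one, and the extra power draw would have to exceed about 62% before the price advantage is used up.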

CloudMatrix clusters are now being delivered to data centres serving top-tier Chinese tech clients. They aren’t just Plan B — they’re a parallel track. Built differently, powered locally, and scaled with state backing.

Final Thoughts: The Next Moat Is Physical

The Western narrative still sees AI dominance through the lens of software and algorithms. But the deeper we go, the more this becomes a game of logistics, power grids, and vertically integrated industrial policy.

China’s AI build-out shows what happens when you align energy strategy, chip design, and capital deployment. The result isn’t the most beautiful AI system. It’s the most deployable one.

For all the talk of exponential models and general intelligence, the ceiling of progress may come down to simple things: copper, electricity, trained labour, and land.

We’ve said it before in The Case for Copper: in a rewired world, it’s not software that limits us, it’s the metals behind the curtain. And AI is now hitting that same wall. Every model, every data centre, every breakthrough depends on the silent metals that carry the current.

As we like to say at Heyokha: When the world scrambles for intelligence, don’t forget who owns the grid.

Tara Mulia
